Web Server Performance Benchmarks

G-WAN Rules in Static Benchmark Test Battery

Over at his blog Spoot!, Nicolas Bonvin recently posted two summaries of the great work he's done benchmarking how well various open-source and free Web servers dish up static content under high loads. Bonvin, a PhD student at the École polytechnique fédérale de Lausanne (EPFL) in Switzerland, specializes in high-volume distributed data systems, and brings considerable expertise and real-world experience to bear in designing his tests.

First Round of Performance Testing

In his first post, Bonvin laid out the evidence he'd accumulated by running benchmark tests against six Web servers: Apache MPM-worker, Apache MPM-event, Nginx, Varnish, G-WAN, and Cherokee, all running on a 64-bit Ubuntu build. (All Web servers used, save for G-WAN, were 64-bit.) This first set of benchmarks was run without any server optimization; each server was deployed with its default settings. Bonvin measured minimum, maximum, and average requests per second (RPS) for each server. All tests were performed locally, eliminating network latency from the equation.

On this initial test battery, G-WAN was the clear winner on every measure, with Cherokee placing second, Nginx and Varnish close to tied, and both strains of Apache coming in dead last. As Bonvin notes, it wasn't even close. G-WAN, a small Web server built for high performance, completed 2.25 times more requests per second than Cherokee (its closest competitor), and served a whopping 9 to 13.5 times more requests per second than the two versions of Apache.
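For readers who want to run a similar comparison, the minimum/maximum/average figures and the "times more" ratios described above could be derived from raw requests-per-second samples roughly as follows. This is a minimal sketch, not Bonvin's tooling: the function names `summarize_rps` and `speedup` and all sample values are hypothetical and chosen only for illustration.

```python
from statistics import mean


def summarize_rps(samples):
    """Return (min, max, average) requests per second for one server."""
    if not samples:
        raise ValueError("need at least one RPS sample")
    return min(samples), max(samples), mean(samples)


def speedup(avg_a, avg_b):
    """Return how many times more requests per second A served than B."""
    return avg_a / avg_b


# Hypothetical samples, NOT measurements from Bonvin's benchmarks.
fast_server = [900.0, 1000.0, 1100.0]
slow_server = [350.0, 400.0, 450.0]

fast_min, fast_max, fast_avg = summarize_rps(fast_server)
_, _, slow_avg = summarize_rps(slow_server)

print(f"fast server: min={fast_min} max={fast_max} avg={fast_avg}")
print(f"fast served {speedup(fast_avg, slow_avg):.2f}x the requests of slow")
```

Comparing averages rather than single runs keeps one unusually good or bad run from skewing the ratio.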

Performance Testing After Configuration Tuning

That's all well and good...but how did G-WAN perform when the other servers could be configured and optimized to serve a high volume of static files? For this second battery of tests, Bonvin consulted with developers and system administration experts for each of the various servers to obtain an optimum configuration. (Some servers, such as Apache Traffic Server and G-WAN, boasted that they required no additional configuration.) He then tested each server with both two worker processes and four worker processes, measuring requests per second (min/max/average), server CPU utilization, and server memory usage. For this second round, Bonvin tested Apache Traffic Server in lieu of Apache MPM-worker and MPM-event, and dropped Cherokee from the test run. (He later ran Cherokee against G-WAN and Nginx; it didn't fare well.)

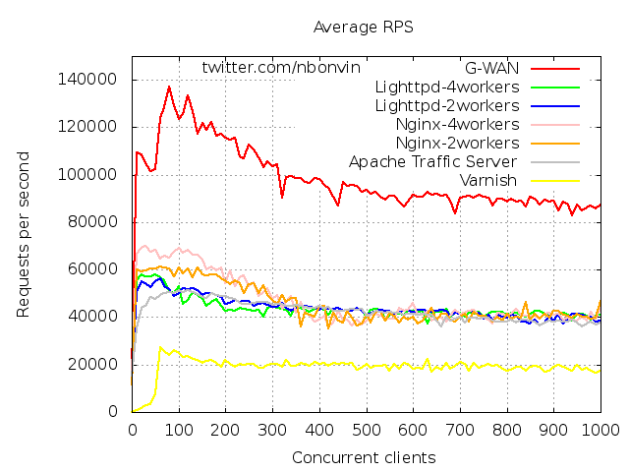

The results were both different and similar. G-WAN continued to outperform the pack, finishing well ahead of its next closest rival, Nginx. Apache Traffic Server fared better than Apache MPM-worker and MPM-event did in the previous tests. Surprisingly, Varnish Cache fared worse than it did previously, either matching or falling short of the maximum requests per second it achieved when Bonvin ran it out of the box.

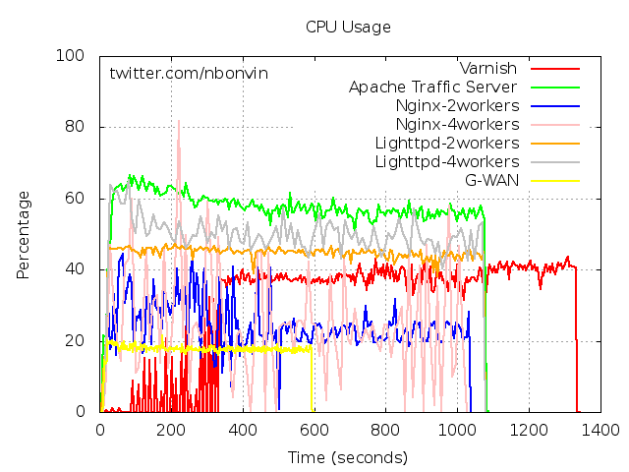

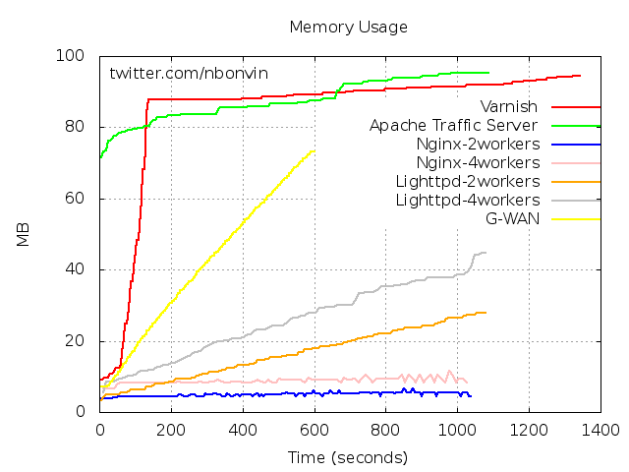

In terms of resources, G-WAN had the second-best CPU utilization, bested only by Varnish Cache. G-WAN's memory utilization wasn't great, but it grew linearly over time. The worst performers in this category, Apache Traffic Server and Varnish, consumed more memory, and consumed most of it within the first four minutes of operation.

Speed vs. Ease

Does this mean the world should flock to G-WAN as its Web server of choice? Certainly, G-WAN posts some amazing performance numbers. Much of the performance boost comes from being designed from the ground up for maximum performance on multi-core processors. But G-WAN also benefits from being what the G-WAN Web site calls a "programmer's application server." G-WAN eschews more modern programming languages and application frameworks like PHP and .NET, relying purely on ANSI C modules for extensibility.

Needless to say, finding expert C programmers isn't as easy as it used to be. That said, G-WAN may be just what's needed to fulfill the high performance requirements of modern Web sites. As noted in an interesting thread on serverfault.com, companies are looking not only to accommodate more users, but to make their server farms greener, which means using less power to fulfill a given request. And as Bonvin demonstrates with his two tests, when it comes to doing more with less, G-WAN is currently king.

Comments

The new version of snorkelembedded, which has yet to be released, will be close to G-WAN's performance without the memory creep, and it is an embedded solution that runs within existing C/C++ solutions. In our testing, our new version consistently outperforms G-WAN in real-life network interactions using ab on a remote client over the same network on which we ran the G-WAN tests; hence, our tests were not based on localhost benchmarking. The testing performed on our existing version by Pierre on G-WAN's site was not valid, since we are NUMA aware and our library was not told about the number of CPUs. His test example instructed SnorkelEmbedded to use 2 threads instead of the recommended 6 for a 6-CPU system. We will be releasing our new version in a couple of weeks along with new benchmarks. Our new version includes our LINUX optimizations. Unlike G-WAN, SnorkelEMBEDDED has been designed to run on any platform, and as such we have only recently begun optimization on a per-platform basis. Each platform's optimizations will be released in subsequent releases.

A couple of corrections:

1) "G-WAN had the second-to-best CPU utilization, bested only by Varnish Cache."

This is actually incorrect. If you look at the chart:

Varnish's CPU usage (the RED line) goes up to 40% of the CPU for 1350 seconds.

By contrast G-WAN's CPU usage is below 20% and stops at 600 seconds.

2) G-WAN's memory usage is now far below Nginx's:

Nginx min:15,095,088 avg:16,120,333 max:17,065,442 Time:6746 sec RAM:12.07 MB

G-WAN min:47,583,292 avg:64,254,056 max:72,957,795 Time:1762 sec RAM: 4.78 MB

3) And, regarding Snorkel, Walter Capers, its author, wrote:

"You [G-WAN] are 10x faster than our latest version -- on LINUX."

http://forum.gwan.com/index.php?p=/discussion/comment/1755/#Comment_1755

Thanks for the corrections and new information. If you would like to write a guest blog post to share more data, please let me know at